A tutorial on using WebPageTest to identify and troubleshoot layout instability issues.

In a previous post I wrote about measuring Cumulative Layout Shift (CLS) in WebPageTest. CLS is an aggregation of all layout shifts, so in this post I thought it would be interesting to dive deeper and inspect each individual layout shift on a page, to try to understand what might be causing the instability and actually fix the problem(s).

Measuring layout shifts

Using the Layout Instability API, we can get a list of all of the layout shift events on a page:

new Promise(resolve => {
  new PerformanceObserver(list => {
    resolve(list.getEntries().filter(entry => !entry.hadRecentInput));
  }).observe({type: "layout-shift", buffered: true});
}).then(console.log);

This produces an array of layout shifts that were not preceded by input events:

[
  {
    "name": "",
    "entryType": "layout-shift",
    "startTime": 210.78500000294298,
    "duration": 0,
    "value": 0.0001045969445437389,
    "hadRecentInput": false,
    "lastInputTime": 0
  }
]

In this example, there was a single, very small layout shift of 0.01% at 210 ms.
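To make raw entries like this easier to read, a small helper can convert each `value` to a percentage, as the post does, and total the scores. This is only a minimal sketch: `summarizeShifts` is a hypothetical name and not part of the Layout Instability API, and the total is assumed to be a plain sum of the shifts that had no recent input.

```javascript
// Hypothetical helper: summarize layout-shift entries for quick reading.
function summarizeShifts(entries) {
  const unprompted = entries.filter(entry => !entry.hadRecentInput);
  const shifts = unprompted.map(entry => ({
    startTimeMs: entry.startTime,
    // Multiply by 100 to read the score as a percentage, as the post does.
    percent: Number((entry.value * 100).toFixed(2))
  }));
  // Plain sum of the individual scores (an assumption about aggregation).
  const total = unprompted.reduce((sum, entry) => sum + entry.value, 0);
  return { shifts, total };
}

const sample = [
  { startTime: 210.78500000294298, value: 0.0001045969445437389, hadRecentInput: false }
];
console.log(summarizeShifts(sample));
```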

Knowing the time and severity of the shift is useful for narrowing down what may have caused it. Let's go back to WebPageTest for a lab environment to do more testing.

Measuring layout shifts in WebPageTest

Similar to measuring CLS in WebPageTest, measuring individual layout shifts requires a custom metric. Fortunately, the process is easier now that Chrome 77 is stable. The Layout Instability API is enabled by default, so you should be able to run that JS snippet on any website in Chrome 77 and get results immediately. In WebPageTest, you can use the default Chrome browser and not have to worry about command line flags or using Canary.

So let's modify that script to produce a custom metric for WebPageTest:

[LayoutShifts]
return new Promise(resolve => {
  new PerformanceObserver(list => {
    resolve(JSON.stringify(list.getEntries().filter(entry => !entry.hadRecentInput)));
  }).observe({type: "layout-shift", buffered: true});
});

The promise in this script resolves to a JSON representation of the array instead of the array itself. This is because custom metrics can only produce primitive data types such as strings or numbers.
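One caveat worth keeping in mind: the observer callback only runs when there is at least one `layout-shift` entry, so on a perfectly stable page the promise above would never resolve. The following is a hedged sketch of a variant that falls back to an empty array after a timeout. The names `collectLayoutShifts` and `timeoutMs`, the injectable `Observer` parameter, and the 3000 ms default are all assumptions for illustration, not part of WebPageTest.

```javascript
// Sketch: resolve with the layout shifts as a JSON string, or "[]" after a timeout.
// `Observer` is injectable so the logic can be exercised outside a browser;
// in Chrome it defaults to the real PerformanceObserver.
function collectLayoutShifts(timeoutMs = 3000, Observer = globalThis.PerformanceObserver) {
  return new Promise(resolve => {
    // Fallback for pages where no layout shift is ever reported.
    const timer = setTimeout(() => resolve('[]'), timeoutMs);
    const observer = new Observer(list => {
      clearTimeout(timer);
      observer.disconnect();
      const shifts = list.getEntries().filter(entry => !entry.hadRecentInput);
      resolve(JSON.stringify(shifts));
    });
    observer.observe({type: 'layout-shift', buffered: true});
  });
}
```

Used as a custom metric, the script body would presumably define this function and end with `return collectLayoutShifts();`.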

The website I will use for the test is ismyhostfastyet.com, a site I created to compare the real-world loading performance of web hosts.

Identifying the causes of layout instability

In the results, we can see that the LayoutShifts custom metric has this value:

[
  {
    "name": "",
    "entryType": "layout-shift",
    "startTime": 3087.2349999990547,
    "duration": 0,
    "value": 0.3422101449275362,
    "hadRecentInput": false,
    "lastInputTime": 0
  }
]

In short, there is a single layout shift of 34.2% at 3087 ms. To help identify the culprit, let's use WebPageTest's filmstrip view.
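When a page reports many shifts, it helps to pick out the largest one first and use its `startTime` to decide where to look on the filmstrip. A minimal sketch, assuming the custom metric value is available as the JSON string produced above; `findLargestShift` is a hypothetical helper name.

```javascript
// Sketch: parse the LayoutShifts custom metric (a JSON string) and return
// the entry with the highest score, or null when there were no shifts.
function findLargestShift(metricValue) {
  const shifts = JSON.parse(metricValue || '[]');
  if (shifts.length === 0) return null;
  return shifts.reduce((worst, shift) => (shift.value > worst.value ? shift : worst));
}

const metricValue = '[{"startTime":3087.2349999990547,"value":0.3422101449275362}]';
const worst = findLargestShift(metricValue);
// Report the score as a percentage and the time in seconds for the filmstrip.
console.log(`${(worst.value * 100).toFixed(1)}% at ~${(worst.startTime / 1000).toFixed(1)}s`);
```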

Scrolling to the ~3 second mark in the filmstrip shows us exactly what is causing the 34% layout shift: the colorful table. The website asynchronously fetches a JSON file and then renders it as a table. The table is initially empty, so waiting to populate it until the results load is what causes the shift.

But that's not all. When the page is visually complete at ~4.3 seconds, we can see that the page's <h1>, "Is my host fast yet?", appears out of nowhere. This happens because the site uses a web font and has not taken any steps to optimize its rendering. The layout doesn't actually appear to shift when this happens, but it is still a poor user experience to have to wait so long to read the title.

Fixing layout instability

Now that we know the asynchronously generated table is causing a third of the viewport to shift, it's time to fix it. We don't know the contents of the table until the JSON results are loaded, but we can still populate the table with some kind of placeholder data so that the layout itself is relatively stable when the DOM is rendered.

Here is the code to generate placeholder data:

// Returns a run of "█" characters, up to maxLength long, to stand in for text.
function getRandomFiller(maxLength) {
  var filler = '█';
  var len = Math.ceil(Math.random() * maxLength);
  return new Array(len).fill(filler).join('');
}

// Returns three random fractions that sum to 1.
function getRandomDistribution() {
  var fast = Math.random();
  var avg = (1 - fast) * Math.random();
  var slow = 1 - (fast + avg);
  return [fast, avg, slow];
}

// Build 36 rows of placeholder data.
window.data = [];
for (var i = 0; i < 36; i++) {
  var [fast, avg, slow] = getRandomDistribution();
  window.data.push({
    platform: getRandomFiller(10),
    client: getRandomFiller(5),
    n: getRandomFiller(1),
    fast,
    avg,
    slow
  });
}

// updateResultsTable and sortResults are defined elsewhere on the page (not shown here).
updateResultsTable(sortResults(window.data, 'fast'));

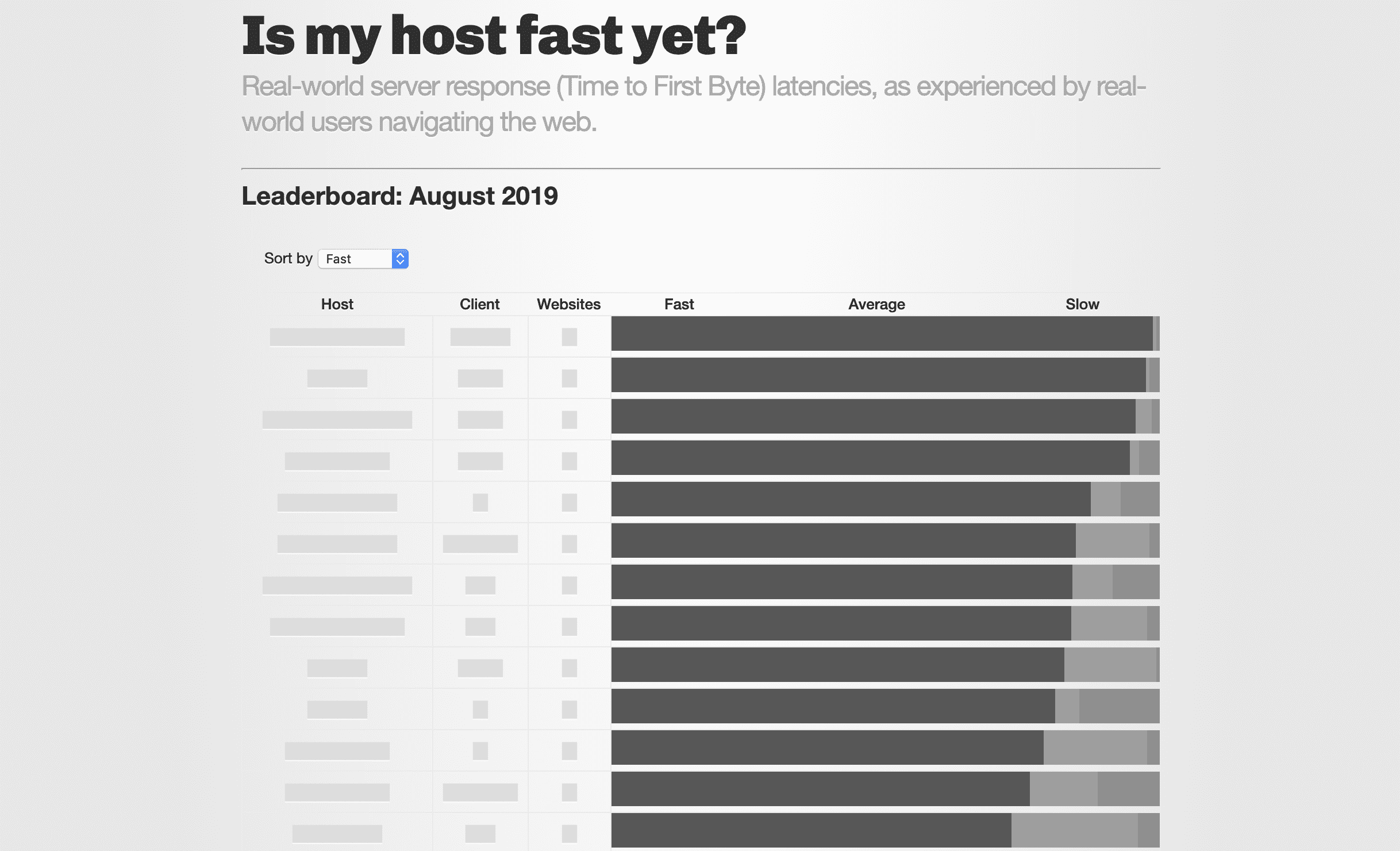

The placeholder data is randomly generated before being sorted. It includes the "█" character repeated a random number of times to create visual placeholders for the text, and a randomly generated distribution of the three main values. I also added some styles to desaturate all of the colors in the table to make it clear that the data isn't fully loaded yet.

The appearance of the placeholders you use doesn't matter for layout stability. The purpose of the placeholders is to assure users that content is coming and the page is not broken.
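The overall flow can be sketched as: render placeholder rows right away, then swap in the real rows once the JSON arrives. In this sketch, `renderWithPlaceholders`, `loadData`, and `render` are hypothetical names standing in for the site's own fetch and table-rendering code, which the post does not show.

```javascript
// Sketch: show placeholders immediately, then replace them with real data.
// `render` receives (rows, isPlaceholder) so it can style the two states
// differently, for example by desaturating the placeholder colors.
async function renderWithPlaceholders(placeholderRows, loadData, render) {
  render(placeholderRows, true);   // reserve the table's space up front
  const rows = await loadData();   // e.g. fetch and parse the JSON results
  render(rows, false);             // same table, now with real content
  return rows;
}
```

The layout is likely to stay most stable when the number of placeholder rows is close to the number of real rows; the code above generates 36.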

This is what the placeholders look like while loading the JSON data:

Addressing the web font issue is much simpler. Because the site uses Google Fonts, we just need to pass the display=swap property in the CSS request. That's all. The Fonts API will add the font-display: swap style to the font declaration, allowing the browser to render the text in a fallback font immediately. Here is the corresponding markup with the fix included:

<link href="https://fonts.googleapis.com/css?family=Chivo:900&display=swap" rel="stylesheet">

Verifying the optimizations

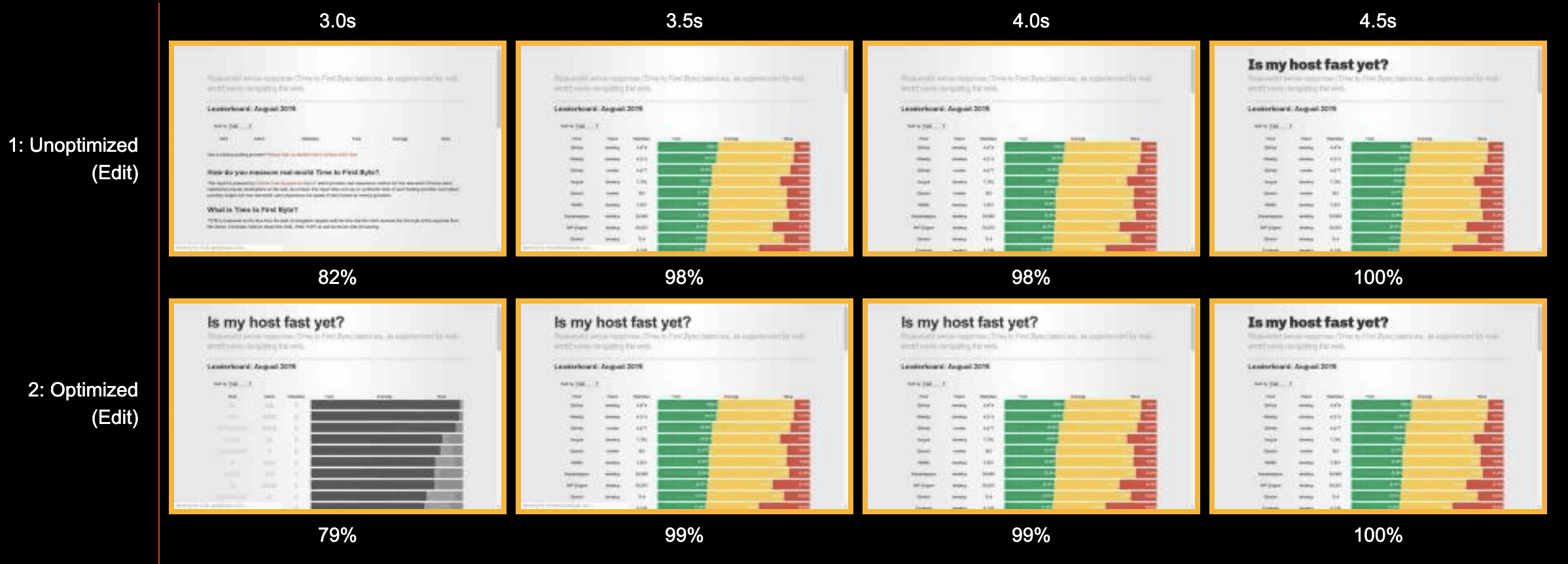

After rerunning the page through WebPageTest, we can generate a before-and-after comparison to visualize the difference and measure the new degree of layout instability:

[
  {
    "name": "",
    "entryType": "layout-shift",
    "startTime": 3070.9349999997357,
    "duration": 0,
    "value": 0.000050272187989256116,
    "hadRecentInput": false,
    "lastInputTime": 0
  }
]

According to the custom metric, there is still a layout shift at 3071 ms (about the same time as before), but the severity of the shift is much lower: 0.005%. I can live with this.
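The before-and-after comparison can also be expressed numerically. A minimal sketch that totals the scores from each run and reports the share of instability removed; `totalShift` and `compareRuns` are hypothetical helper names, and the inputs are the two values reported in this post.

```javascript
// Sketch: compare total layout shift before and after a fix.
function totalShift(entries) {
  return entries.reduce((sum, entry) => sum + entry.value, 0);
}

function compareRuns(before, after) {
  const beforeTotal = totalShift(before);
  const afterTotal = totalShift(after);
  // Fraction of the original instability that was removed (0 when there was none).
  const reduction = beforeTotal === 0 ? 0 : 1 - afterTotal / beforeTotal;
  return { beforeTotal, afterTotal, reduction };
}

const result = compareRuns(
  [{ value: 0.3422101449275362 }],
  [{ value: 0.000050272187989256116 }]
);
console.log(`${(result.reduction * 100).toFixed(2)}% less layout shift`);
```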

It is also clear from the filmstrip that the <h1> immediately falls back to a system font, allowing users to read it sooner.

Conclusion

Complex websites will likely experience many more layout shifts than in this example, but the remediation process is still the same: add layout instability metrics to WebPageTest, cross-reference the results with the visual loading filmstrip to identify the culprits, and implement a fix using placeholders to reserve the screen space.

(One more thing) Measuring layout instability experienced by real users

It's nice to be able to run WebPageTest on a page before and after an optimization and see an improvement in a metric, but what really matters is that the user experience is improving. Isn't that why we are trying to improve the site in the first place?

So what would be great is if we started measuring real users' layout instability experiences alongside our traditional web performance metrics. This is a crucial piece of the optimization feedback loop, because field data tells us where the problems are and whether our fixes made a positive difference.

In addition to collecting your own layout instability data, check out the Chrome UX Report, which includes Cumulative Layout Shift data from real user experiences on millions of websites. It lets you find out how you (or your competitors) are performing, or you can use it to explore the state of layout instability across the web.
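One way to collect this in the field is to keep a running total of layout shift scores and send it to an analytics endpoint when the page is hidden. This is a sketch under stated assumptions, not a definitive implementation: `createShiftReporter` and `send` are hypothetical names, `/analytics` is a placeholder endpoint, and the total is a plain sum of shifts without recent input.

```javascript
// Sketch: accumulate layout shift scores and report the total once.
// `send` is a hypothetical callback supplied by the caller.
function createShiftReporter(send) {
  let total = 0;
  let sent = false;
  return {
    handleEntries(entries) {
      for (const entry of entries) {
        if (!entry.hadRecentInput) total += entry.value;
      }
    },
    flush() {
      if (sent) return;  // report only once per page view
      sent = true;
      send({ cumulativeLayoutShift: total });
    }
  };
}

// Browser-only wiring; skipped where there is no document (for example in Node).
if (typeof document !== 'undefined' && typeof PerformanceObserver !== 'undefined') {
  const reporter = createShiftReporter(data =>
    navigator.sendBeacon('/analytics', JSON.stringify(data)));  // placeholder endpoint
  const observer = new PerformanceObserver(list => reporter.handleEntries(list.getEntries()));
  observer.observe({ type: 'layout-shift', buffered: true });
  document.addEventListener('visibilitychange', () => {
    if (document.visibilityState === 'hidden') {
      reporter.handleEntries(observer.takeRecords());  // include entries not yet delivered
      reporter.flush();
    }
  });
}
```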