Learn how to profile the performance of Web Audio apps in Chrome using chrome://tracing and the WebAudio tab in DevTools.

You have probably come to this documentation because you are developing an app that uses the Web Audio API and have experienced some unexpected glitches, such as popping noises in the output. You might already be involved in a crbug.com discussion where a Chrome engineer has asked you to upload "trace data." This guide shows how to obtain that data so you can help engineers triage and eventually fix the issue.

There are two tools that help you profile web audio: chrome://tracing and the WebAudio tab in Chrome DevTools.

When do you use chrome://tracing?

When mysterious "glitches" happen. Profiling the app with the tracing tools gives you insight into:

- Time slices spent in specific function calls on different threads

- Audio callback timing in the timeline view

It usually shows missed deadlines or long garbage collection pauses that can cause unexpected audio glitches. This information is useful for catching a bug. Chromium engineers will ask for trace data if they cannot reproduce the issue locally. See The Trace Event Profiling Tool for general instructions on how to trace.

When do you use the WebAudio tab?

When you want to get a feel for how your app performs in the real world. DevTools shows you a running estimate of render capacity, which indicates how the web audio rendering engine is handling render tasks within a given render budget (for example, approximately 2.67 ms at 48 kHz). If the capacity is close to 100 percent, your app is likely to produce glitches because the renderer cannot finish the work within the render budget.
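The budget figure quoted above can be reproduced with a little arithmetic. The sketch below assumes the Web Audio render quantum of 128 frames per render call; the constant and function names are illustrative and are not part of any API.

```typescript
// Frames processed per render call (assumed: the Web Audio render quantum).
const RENDER_QUANTUM_FRAMES = 128;

// Time available to render one quantum, in milliseconds.
function renderBudgetMs(
  sampleRate: number,
  frames: number = RENDER_QUANTUM_FRAMES
): number {
  if (sampleRate <= 0) {
    throw new RangeError("sampleRate must be positive");
  }
  return (frames / sampleRate) * 1000;
}

console.log(renderBudgetMs(48000).toFixed(2)); // "2.67"
console.log(renderBudgetMs(44100).toFixed(2)); // "2.90"
```

A lower sample rate gives a slightly larger budget per quantum, which is why the same audio graph can behave differently on devices with different hardware sample rates.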

Use chrome://tracing

How to capture trace data

The instructions written below are for Chrome 80 and later.

For best results, close all other tabs and windows and disable extensions. Alternatively, you can launch a new instance of Chrome or use a build from a different release channel (for example, Beta or Canary). Once the browser is ready, follow the steps below:

- Open your application (web page) in a tab.

- Open another tab and go to chrome://tracing.

- Press the Record button and select Manually select settings.

- Press the None buttons in both the Record Categories and Disabled by Default Categories sections.

- In the Record Categories section, select the following:
  - audio
  - blink_gc
  - media
  - v8.execute (if you're interested in AudioWorklet JS code performance)
  - webaudio

- In the Disabled by Default Categories section, select the following:
  - audio-worklet (if you're interested in where the AudioWorklet thread starts)
  - webaudio.audionode (if you need the detailed trace for each AudioNode)

- Press the Record button at the bottom.

- Go back to your application tab and redo the steps that triggered the issue.

- When you have enough trace data, go back to the tracing tab and press Stop.

- The tracing tab displays the result.

- Press Save to save the trace data.

How to analyze trace data

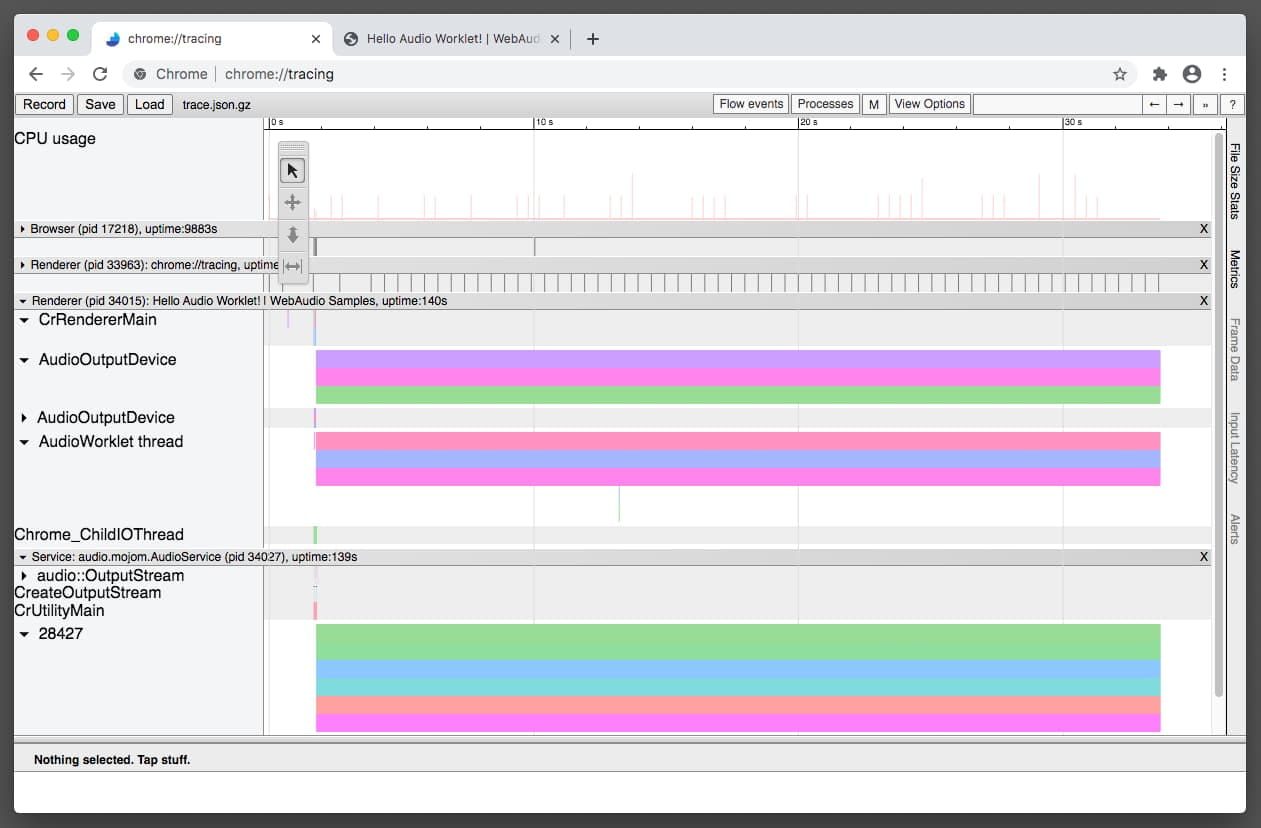

The trace data visualizes how Chrome's web audio engine renders audio. The renderer has two different render modes: Native mode and Worklet mode. Each mode uses a different threading model, so the tracing results also differ.

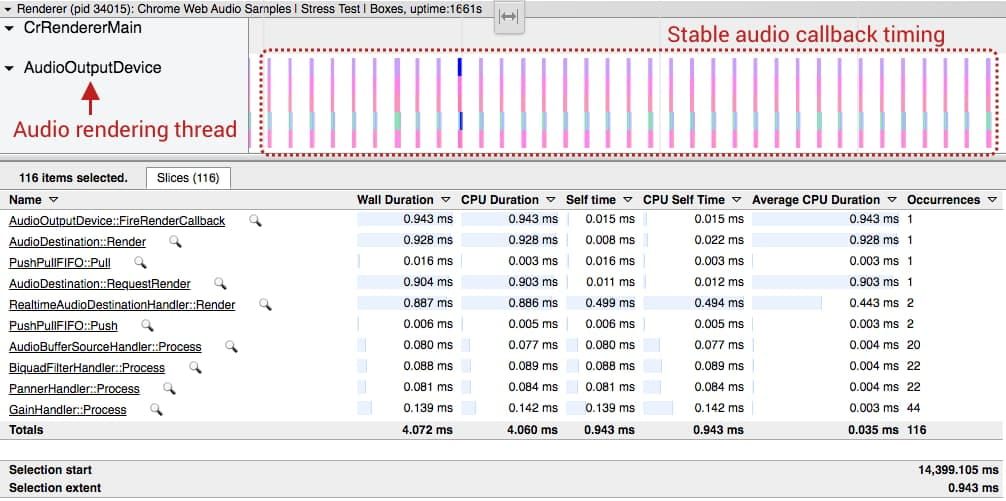

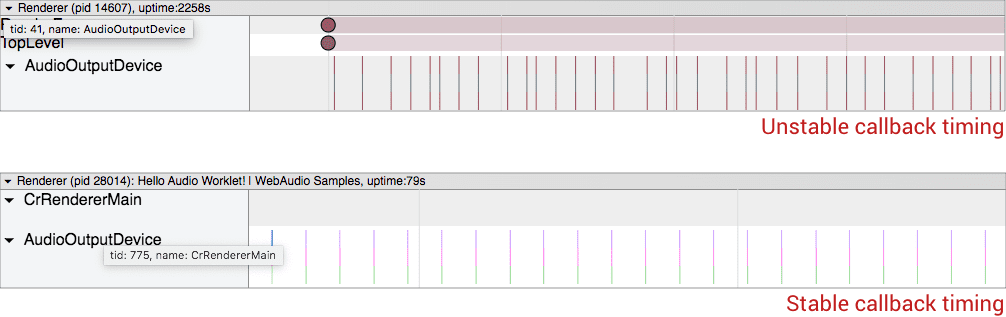

Native mode

In native mode, the AudioOutputDevice thread runs all the web audio code. The AudioOutputDevice is a real-time priority thread that originates from the browser's audio service and is driven by the audio hardware clock. If you see an irregularity in the trace data in this lane, the callback timing from the device may be jittery. The combination of Linux and Pulse Audio is known to have this problem. See the following Chromium issues for more details: #825823, #864463.

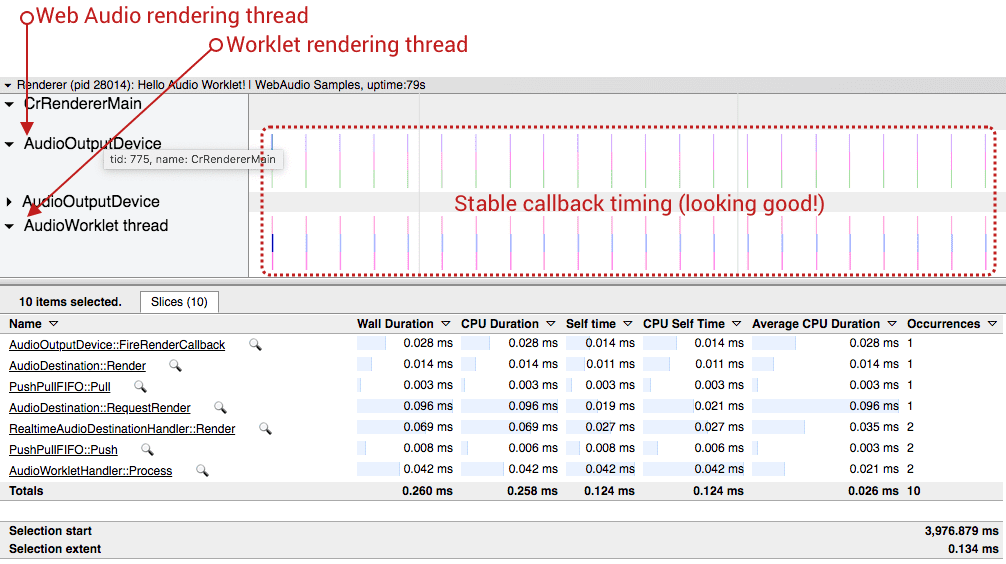

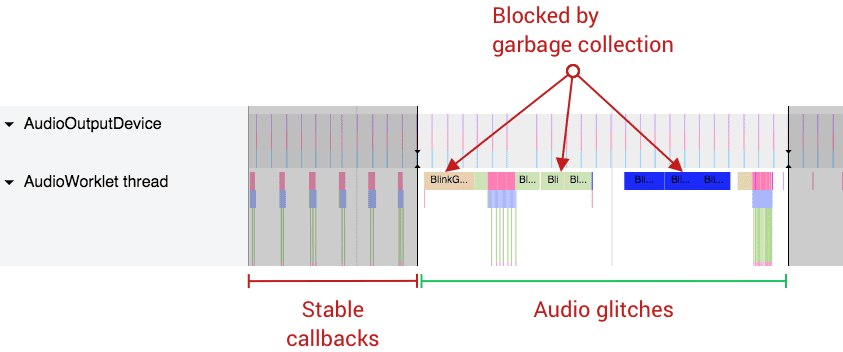

Worklet mode

In Worklet mode, which is characterized by a thread jump from AudioOutputDevice to the AudioWorklet thread, you should see well-aligned traces in two thread lanes as shown below. When the worklet is active, all web audio operations are rendered by the AudioWorklet thread. This thread currently does not run at real-time priority. The common irregularity here is a big block caused by garbage collection or missed render deadlines. Both cases lead to glitches in the audio stream.

In both cases, the ideal trace data is characterized by well-aligned audio device callback invocations and render tasks that complete well within the given render budget. The two screenshots above are good examples of ideal trace data.
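To make the second criterion concrete, here is a minimal sketch that flags render tasks exceeding a budget. It assumes you have already extracted per-task render durations from a trace by hand or with your own tooling; the function name and the input shape are hypothetical and do not match the file format that chrome://tracing saves.

```typescript
// Returns the indices of render tasks that took longer than the budget.
// `renderDurationsMs` is a hypothetical, pre-extracted list of task durations.
function findBudgetOverruns(
  renderDurationsMs: number[],
  budgetMs: number
): number[] {
  const overruns: number[] = [];
  renderDurationsMs.forEach((durationMs, index) => {
    if (durationMs > budgetMs) {
      overruns.push(index);
    }
  });
  return overruns;
}

// Example: with a 2.67 ms budget, only the third task (index 2) overruns.
console.log(findBudgetOverruns([0.8, 1.1, 3.4, 0.9], 2.67)); // [ 2 ]
```

An empty result corresponds to the "ideal" picture described above; any index in the result marks a task that would be worth inspecting in the timeline view.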

Learn from real-world examples

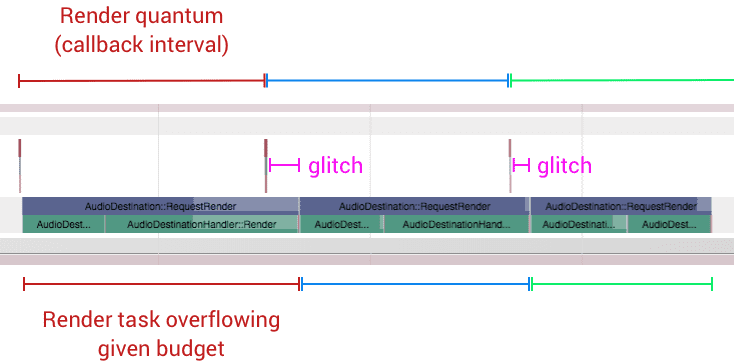

Example 1: rendering tasks that go beyond the rendering budget

The screenshot below (Chromium issue #796330) is a typical example of code in AudioWorkletProcessor taking too long and going beyond the given render budget. The callback timing is well behaved, but the Web Audio API's audio processing function call failed to complete its work before the next device callback.

Your options:

- Reduce the workload of the audio graph by using fewer AudioNode instances.

- Reduce the workload of your code in the AudioWorkletProcessor.

- Increase the base latency of AudioContext.
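For the last option, the AudioContext constructor accepts a latencyHint option, and the resulting value can be read back from the context's baseLatency attribute. The sketch below only builds the options object so that it can run outside a browser; the helper name and the local ContextOptions type are illustrative stand-ins, and the browser treats the value as a hint rather than a guarantee.

```typescript
// Local stand-in for the browser's AudioContextOptions type, so this
// sketch type-checks outside a browser as well.
type LatencyHint = "interactive" | "balanced" | "playback" | number;

interface ContextOptions {
  latencyHint: LatencyHint;
}

// Builds constructor options that ask for a larger latency.
// Pass a number to request a specific latency in seconds;
// otherwise the "playback" category is requested.
function relaxedLatencyOptions(seconds?: number): ContextOptions {
  if (seconds !== undefined && seconds <= 0) {
    throw new RangeError("latency in seconds must be positive");
  }
  return { latencyHint: seconds === undefined ? "playback" : seconds };
}

// In a browser, the options would be used like this:
//   const context = new AudioContext(relaxedLatencyOptions());
//   console.log(context.baseLatency); // base latency in seconds
```

A larger base latency gives the renderer more slack per device callback, at the cost of a longer delay between scheduling a sound and hearing it, so it suits playback-style apps better than interactive instruments.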

Example 2: significant garbage collection on the worklet thread

Unlike on the native audio rendering thread, garbage collection is managed on the worklet thread. That means that if your code allocates and frees memory (for example, by creating new arrays), it eventually triggers a garbage collection that synchronously blocks the thread. If the combined workload of web audio operations and garbage collection exceeds the given render budget, the audio stream glitches. The following screenshot is an extreme example of this case.

Chrome's AudioWorkletProcessor implementation generates Float32Array instances for the input and output buffers on every audio processing callback. This also slowly increases memory usage over time. The team plans to improve the design once the related specification is finalized.

Your options:

- Allocate memory up front and reuse it whenever possible.

- Use different design patterns based on SharedArrayBuffer. Although this is not a perfect solution, several web audio apps use a similar pattern with SharedArrayBuffer to run intensive audio code.
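As a minimal sketch of the first option (allocate up front, then reuse), the class below keeps one Float32Array for its whole lifetime and never allocates inside its processing method. BlockDelay is a hypothetical example, not code from Chrome or from any library; in a real app the same logic would live inside an AudioWorkletProcessor's process() method, which is only available in the worklet scope and is therefore not used here.

```typescript
// Delays the signal by one block. The holding buffer is allocated once in
// the constructor and reused on every call, so process() creates no garbage.
class BlockDelay {
  private readonly held: Float32Array;

  constructor(frames: number = 128) {
    this.held = new Float32Array(frames); // allocated once, up front
  }

  // Handles one channel of one block. No `new`, array literals,
  // or closures are created in here.
  process(input: Float32Array, output: Float32Array): void {
    const n = Math.min(input.length, output.length, this.held.length);
    for (let i = 0; i < n; i++) {
      output[i] = this.held[i]; // emit the block stored on the previous call
      this.held[i] = input[i]; // keep the current block for the next call
    }
  }
}

const delay = new BlockDelay(4);
const out = new Float32Array(4);
delay.process(new Float32Array([1, 2, 3, 4]), out);
console.log(Array.from(out)); // [ 0, 0, 0, 0 ]
delay.process(new Float32Array([5, 6, 7, 8]), out);
console.log(Array.from(out)); // [ 1, 2, 3, 4 ]
```

The buffers in the usage lines are created only to drive the example; inside the class, the hot path touches nothing but memory that already exists.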

Example 3: Jittery audio device callback from AudioOutputDevice

Precise timing of the audio callback is the most important thing for web audio. It should be the most precise clock in your system. If the operating system or its audio subsystem cannot guarantee solid callback timing, all subsequent operations are affected. The image below is an example of a jittery audio callback. Compared to the previous two images, the interval between callbacks varies significantly.

This is a known issue on Linux when Pulse Audio is used as the audio backend. It is still under investigation (Chromium issue #825823).
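One way to put a number on this kind of jitter is to compute the mean and variance of the intervals between callbacks. The sketch below assumes a plain list of callback timestamps in milliseconds that you have extracted yourself; the function and field names are illustrative, and it uses an unweighted mean and variance for simplicity.

```typescript
interface IntervalStats {
  meanMs: number;
  varianceMs2: number;
}

// Computes the unweighted mean and population variance of the intervals
// between consecutive callback timestamps (all in milliseconds).
function callbackIntervalStats(callbackTimesMs: number[]): IntervalStats {
  if (callbackTimesMs.length < 2) {
    throw new RangeError("need at least two callback timestamps");
  }
  const intervals: number[] = [];
  for (let i = 1; i < callbackTimesMs.length; i++) {
    intervals.push(callbackTimesMs[i] - callbackTimesMs[i - 1]);
  }
  const mean = intervals.reduce((sum, x) => sum + x, 0) / intervals.length;
  const variance =
    intervals.reduce((sum, x) => sum + (x - mean) * (x - mean), 0) /
    intervals.length;
  return { meanMs: mean, varianceMs2: variance };
}

// Steady callbacks every 10 ms: variance is zero.
console.log(callbackIntervalStats([0, 10, 20, 30, 40])); // { meanMs: 10, varianceMs2: 0 }
// Same average rate, but alternating 5 ms and 15 ms gaps.
console.log(callbackIntervalStats([0, 5, 20, 25, 40])); // { meanMs: 10, varianceMs2: 25 }
```

Both sequences have the same mean interval, which is why the variance, not the mean, is the value to watch when you suspect a jittery device callback.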

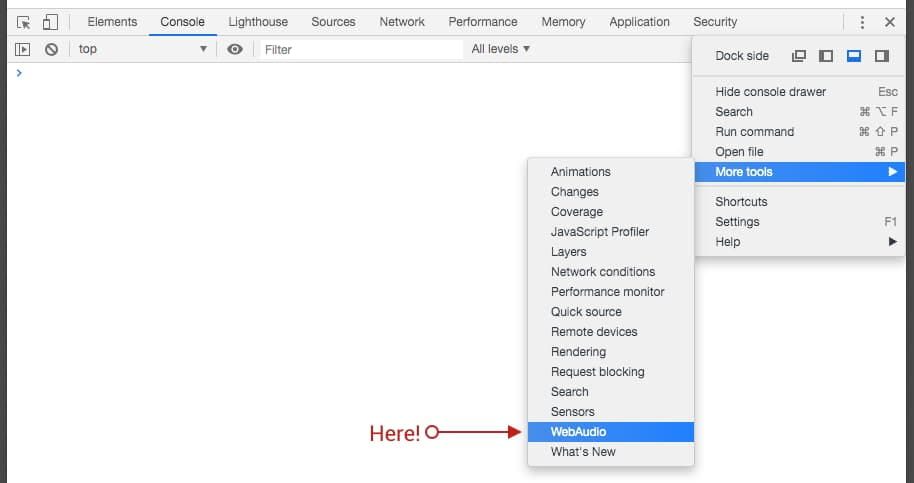

Use the WebAudio tab in DevTools

You can also use the DevTools tab designed specifically for web audio. It is less comprehensive than the tracing tool, but it is useful when you want to gauge the running performance of your application.

Access the panel by opening the Main menu from DevTools, then go to More tools > WebAudio.

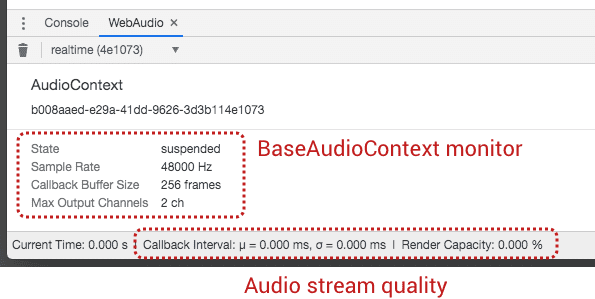

This tab displays information about running instances of BaseAudioContext. Use it to see the performance of the web audio renderer on the page.

Since a page can have multiple BaseAudioContext instances, the Context selector (the dropdown that says realtime (4e1073) in the last screenshot) lets you choose which one to inspect. The inspector view shows the properties (for example, sample rate, buffer size, channel count, and context state) of the BaseAudioContext instance you select, and it updates dynamically when the properties change.

The most useful thing in this view is the status bar at the bottom. It is only active when the selected BaseAudioContext is an AudioContext, which runs in real time. This bar shows the instantaneous audio stream quality of the selected AudioContext and is updated every second. It provides the following information:

- Callback interval (ms): Displays the weighted mean and variance of the callback interval. Ideally, the mean should be stable and the variance should be close to zero. Otherwise, the operating system's audio infrastructure might have problems in deeper layers.

- Render capacity (percent): Follows this formula: (time spent in actual rendering / instantaneous callback interval) × 100. When the capacity is close to 100 percent, the renderer is doing too much for the given render budget, so you should consider doing less in your web audio code.
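The capacity formula in the list above translates directly into code. This is a minimal sketch with a hypothetical function name; it takes one render time and one callback interval as inputs and does not read anything from DevTools.

```typescript
// (time spent in actual rendering / instantaneous callback interval) × 100
function renderCapacityPercent(
  renderTimeMs: number,
  callbackIntervalMs: number
): number {
  if (callbackIntervalMs <= 0) {
    throw new RangeError("callbackIntervalMs must be positive");
  }
  return (renderTimeMs / callbackIntervalMs) * 100;
}

console.log(renderCapacityPercent(2.5, 10)); // 25
console.log(renderCapacityPercent(5, 10)); // 50
```

A value above 100 means the render task took longer than the interval in which it had to finish, which is the overrun situation shown in Example 1.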

You can manually trigger a garbage collection by clicking the trash can icon.

Conclusion

Audio debugging is hard. Debugging audio in the browser is even harder. However, these tools can ease the pain by giving you useful insight into how your web audio code performs. In some cases web audio may not behave as it should; if so, don't be afraid to file a bug on the Chromium Bug Tracker. While filling out the information, you can follow the guide above and submit the trace data you captured along with a reproducible test case. With this data, Chrome engineers will be able to fix your bug much faster.

Photo by Jonathan Velasquez on Unsplash